While talk of an AI bubble fills the air these days, with fears that overinvestment could make it pop at any time, something of a contradiction is brewing on the ground: Companies like Google and OpenAI can barely build infrastructure fast enough to fill their AI needs.

During an all-hands meeting earlier this month, Google’s AI infrastructure head Amin Vahdat told employees that the company must double its serving capacity every six months to meet demand for artificial intelligence services, reports CNBC. The comments offer a rare look at what Google executives are telling their own employees. Vahdat, a vice president at Google Cloud, presented slides showing the company needs to scale “the next 1000x in 4-5 years.”
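The two figures are roughly consistent with each other. As a back-of-the-envelope check, assuming a steady doubling every six months (a simplification; the slides may not describe the growth curve this way), five years contains ten six-month periods:

$$
\text{growth after } t \text{ years} = 2^{t/0.5} = 2^{2t}, \qquad 2^{2 \cdot 5} = 2^{10} = 1024 \approx 1000
$$

At the four-year end of the range, the same rate yields only $2^{8} = 256$x. Reaching 1000x in four years would require doubling roughly every 4.8 months.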

While a thousandfold increase in compute capacity sounds ambitious by itself, Vahdat noted some key constraints: Google needs to be able to deliver this increase in capability, compute, and storage networking “for essentially the same cost and increasingly, the same power, the same energy level,” he told employees during the meeting. “It won’t be easy but through collaboration and co-design, we’re going to get there.”

It’s unclear how much of this “demand” Google mentioned represents organic user interest in AI capabilities versus the company integrating AI features into existing services like Search, Gmail, and Workspace. But whether people use the features voluntarily or not, Google isn’t the only tech company struggling to keep up with a growing base of customers using AI services.

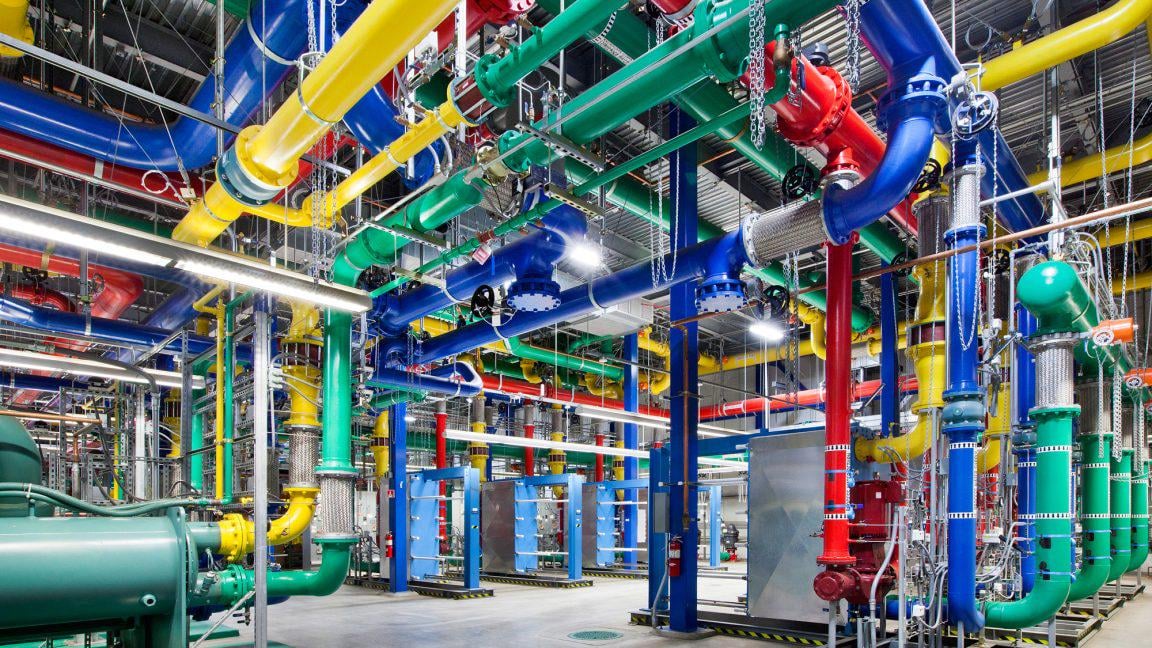

Major tech companies are in a race to build out data centers. Google competitor OpenAI is planning to build six massive data centers across the US through its Stargate partnership project with SoftBank and Oracle, committing over $400 billion in the next three years to reach nearly 7 gigawatts of capacity. The company faces similar constraints serving its 800 million weekly ChatGPT users, with even paid subscribers regularly hitting usage limits for features like video synthesis and simulated reasoning models.

“The competition in AI infrastructure is the most critical and also the most expensive part of the AI race,” Vahdat said at the meeting, according to CNBC’s viewing of the presentation. The infrastructure executive explained that Google’s challenge goes beyond simply outspending competitors. “We’re going to spend a lot,” he said, but noted the real objective is building infrastructure that is “more reliable, more performant and more scalable than what’s available anywhere else.”

The thousandfold scaling challenge

One major bottleneck for meeting AI demand has been Nvidia’s inability to produce enough of the GPUs that accelerate AI computations. Just a few days ago, in its quarterly earnings report, Nvidia said its AI chips are “sold out” as it races to meet demand that grew its data center revenue by $10 billion in a single quarter.

The lack of chips and other infrastructure constraints affect Google’s ability to deploy new AI features. During the all-hands meeting on November 6, Google CEO Sundar Pichai cited the example of Veo, Google’s video generation tool that received an upgrade last month. “When Veo launched, how exciting it was,” Pichai said. “If we could’ve given it to more people in the Gemini app, I think we would have gotten more users but we just couldn’t because we are at a compute constraint.”

At the same meeting, Vahdat’s presentation outlined how Google plans to achieve its massive scaling targets without simply throwing money at the problem. The company plans to rely on three main strategies: building physical infrastructure, developing more efficient AI models, and designing custom silicon chips.

Using its own chips means Google does not need to completely rely on Nvidia hardware to build out its AI capabilities. Earlier this month, for example, Google announced the general availability of its seventh-generation Tensor Processing Unit (TPU) called Ironwood. Google claims it is “nearly 30x more power efficient” than its first Cloud TPU from 2018.

Given widespread acknowledgment of a potential AI industry bubble, including extended remarks by Pichai in a recent BBC interview, the aggressive plans for AI data center expansion reflect Google’s calculation that the risk of underinvesting exceeds the risk of overcapacity. But it’s a bet that could prove costly if demand doesn’t continue to increase as expected.

At the all-hands meeting, Pichai told employees that 2026 will be “intense,” citing both AI competition and pressure to meet cloud and compute demand. Pichai directly addressed employee concerns about a potential AI bubble, acknowledging the topic has been “definitely in the zeitgeist.”

Google says AI demand forcing 2x capacity growth every 6 months as it targets 1000x scale in 5 years

by u/callsonreddit in r/wallstreetbets

This is only the first inning of AI.

Google won the ai race 🫡

Priced in

> Companies like Google and OpenAI can barely build infrastructure fast enough to fill their AI needs.

But how does this relate to profitability?

> Google needs to be able to deliver this increase in capability, compute, and storage networking “for essentially the same cost and increasingly, the same power, the same energy level,” he told employees during the meeting. “It won’t be easy but through collaboration and co-design, we’re going to get there.”

Wait, what? Lmao.

So now we engineer the sentiment to bullish?

AI demand from companies that haven’t shown a single way to profit off it yet … remind me what happened when the internet was invented.. the exact same thing?

For what though

Ai is not a fucking bubble

Microsoft, Google, Amazon, Meta, Tesla/xAI, soon Apple all hire the absolute smartest minds on the planet to work for them. And they’ve all collectively decided to invest a shit ton of money into AI, and they’re only increasing their spend every quarter

Meanwhile WSBers: omg there’s a bubble, omg they’re so dumb for spending so much and we’re smart!!!

humans must build AI infrastructure for AI needs ..

So we’re like between Terminator 1 and 2?

I have a feeling Google is better at working on AI than on messaging apps

So buy companies like ECG.

One thing is for sure: the EU was right, they are NEVER going to catch up in the cloud compute industry lmaoo.

Look at the price per task of the new Gemini. They are getting linear gains in performance with exponential increases in cost.

It’s a bubble because the revenue has to increase like a moon rocket (to service the debt) and they’re still selling hopes and dreams when it comes to value delivered to customers.

When companies start asking “what is the payoff for all this AI spend” the successful ones are going to answer “we replaced some engineer headcount with AWS and we’ll see a return on investment in 30 years.” The unlucky ones will botch everything with AI and end up reverting to a commit from 5 years ago.

All the current success stories are high valuations based on exuberance or they are downstream from the regarded levels of capex.

If/when this AI bubble crashes, Apple is going to be sitting pretty good.

So where is all the water and power gonna come from for this magical bullshit? And who is it gonna service if everyone is replaced by AI?

It's always been Google

Wow, it's almost like the top engineers, computer scientists, and businesses in the world realize how transformative AI will be.

Exactly… AI is demanding resources to do more AI. I can’t be the only person that understands this.

Scary shit

Yup, AI demand is EXTREME. Until it is running every NPC in every video game, and every person has their personal assistant…. and every business is running various models and teams of agent / accountants….. and every security camera is running things through an intelligent AI that really KNOWS that area and what is normal and not…..

Things won’t be slowing down any time soon. The demand is just insane.

This seems like a piece to address or adjust to the incoming bubble.

Sure there is huge demand now but will it remain once the actual cost is passed to consumers? Once it isn’t a loss leader I think a lot of people will drop it due to sticker shock

https://preview.redd.it/h6714vlhxv2g1.png?width=1024&format=png&auto=webp&s=a87827ada4b2768a55b2aa75c5ab474d29145fb6

What about water?

Waiting for one of these datacenters to get hijacked and used to brute force some crypto private keys.

STOP I can only be so ERECT!

Energy will be the ultimate bottleneck at the end of the day… look at nuclear, especially after the recent beatdown.

$AVGO 🎉🎉🎉

I do believe AI demand is increasing and infrastructure can’t follow.

But do they make money off the AI services they provide?

I don’t remember paying for Gemini or ChatGPT.

So, the discussion of AI being a bubble isn’t saying there isn’t demand for it on the ground. It’s that AI fever isn’t manifesting significant income or improvements that justify the massive expenditure of money.

It’s effectively companies shifting the money around in a circle buying components and building data centers to pay for more production etc. The actual money coming from outside the loop is a pittance compared to the money being dumped into the AI black hole.

https://preview.redd.it/59wxj2hq0w2g1.jpeg?width=600&format=pjpg&auto=webp&s=d44be474d8e5ba7e8e74ccf7a24c24c284c2bc27

Is demand *truly* doubling every 6 months, or is this “demand” just the result of Google forcing an AI answer down everybody’s throat for every search they make?

I, for one, hate the option and wish it could be disabled.

Lots of demand for the same cost?

So it's not revenue-generating, just maintaining